Brief Definition of Cache Memory

Cache memory provides faster data storage and access by keeping copies of programs and data that the processor uses frequently. When the processor requests data that already has a copy in the cache, it does not need to fetch that data from main memory or the hard disk.
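The lookup described above can be sketched as a small "read-through" cache: check the fast store first, and only on a miss go to the slower store and keep a copy. This is a minimal illustration, assuming a Python dict stands in for both the cache and the slower backing store; the class and variable names are hypothetical, and unlike a real cache it has no size limit or eviction.

```python
class ReadThroughCache:
    """Minimal read-through cache over a slower backing store (illustrative only)."""

    def __init__(self, backing_store):
        self.backing_store = backing_store  # hypothetical stand-in for main memory or disk
        self.cache = {}                     # fast copies of recently used data
        self.hits = 0
        self.misses = 0

    def read(self, address):
        if address in self.cache:
            # Cache hit: the data is already in fast storage.
            self.hits += 1
            return self.cache[address]
        # Cache miss: fetch from the slower store and keep a copy for next time.
        self.misses += 1
        value = self.backing_store[address]
        self.cache[address] = value
        return value


main_memory = {0x10: "a", 0x20: "b"}
c = ReadThroughCache(main_memory)
c.read(0x10)  # miss: copied into the cache
c.read(0x10)  # hit: served from the cache
c.read(0x20)  # miss
print(c.hits, c.misses)  # prints: 1 2
```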

Cache memory can be primary or secondary, with primary cache memory integrated directly into (or closest to) the processor. In addition to hardware-based caches, cache memory can also be a disk cache, where a reserved portion of a disk stores and provides access to frequently accessed data and applications from that disk.


Memory Caching

A memory cache, sometimes called a cache store or RAM cache, is a portion of memory made of high-speed static RAM (SRAM) instead of the slower dynamic RAM (DRAM) used for main memory. Memory caching is effective because most programs access the same data or instructions over and over. By keeping as much of this information as possible in SRAM, the computer avoids accessing the slower DRAM.
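One standard way to quantify why this works is the average memory access time: hit time + miss rate × miss penalty. The post itself does not give this formula; it is the usual textbook measure, and the latencies below are hypothetical values chosen only to show the trend, not measurements of any real SRAM or DRAM.

```python
def average_access_time(hit_time_ns, miss_rate, miss_penalty_ns):
    """Average memory access time = hit time + miss rate * miss penalty."""
    return hit_time_ns + miss_rate * miss_penalty_ns


# Hypothetical latencies, for illustration only.
SRAM_HIT_NS = 1.0       # time to read from the cache
DRAM_PENALTY_NS = 60.0  # extra time to go to DRAM on a miss

for miss_rate in (0.02, 0.10, 0.50):
    t = average_access_time(SRAM_HIT_NS, miss_rate, DRAM_PENALTY_NS)
    print(f"miss rate {miss_rate:.0%}: {t:.1f} ns")
# miss rate 2%: 2.2 ns
# miss rate 10%: 7.0 ns
# miss rate 50%: 31.0 ns
```

Because programs reuse the same data repeatedly, the miss rate stays low and the average cost stays close to the SRAM hit time.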

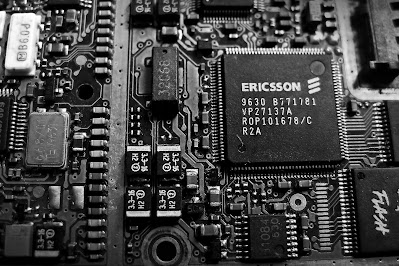

L1 and L2 Caches

Some memory caches are built into the architecture of microprocessors. The Intel 80486 microprocessor, for example, contains an 8 KB memory cache, and the Pentium has a 16 KB cache. Such internal caches are often called Level 1 (L1) caches. PCs of that generation typically also came with external cache memory, called Level 2 (L2) caches. These caches sit between the CPU and the DRAM. Like L1 caches, L2 caches are made of SRAM, but they are much larger.
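The L1/L2 arrangement amounts to a lookup order: try the small, fast L1 first, then the larger L2, and only then DRAM, filling the caches on the way back. The sketch below assumes tiny hypothetical capacities and a simple oldest-entry-first (FIFO) eviction rule chosen for brevity; real processors use hardware sets, cache lines, and their own replacement policies.

```python
class Level:
    """One cache level with a fixed capacity (in entries)."""

    def __init__(self, name, capacity):
        self.name = name
        self.capacity = capacity
        self.data = {}

    def store(self, address, value):
        # Simplified FIFO eviction: drop the oldest inserted entry when full.
        if address not in self.data and len(self.data) >= self.capacity:
            oldest = next(iter(self.data))
            del self.data[oldest]
        self.data[address] = value


def load(address, l1, l2, dram):
    """Look in L1, then L2, then DRAM; return (value, where it was found)."""
    if address in l1.data:
        return l1.data[address], "L1"
    if address in l2.data:
        value = l2.data[address]
        l1.store(address, value)  # promote into the smaller, faster L1
        return value, "L2"
    value = dram[address]
    l2.store(address, value)      # fill both cache levels on the way back
    l1.store(address, value)
    return value, "DRAM"


dram = {addr: addr * 2 for addr in range(16)}
l1 = Level("L1", capacity=2)  # hypothetical sizes: L1 small and fast
l2 = Level("L2", capacity=8)  # L2 larger but slower

print(load(1, l1, l2, dram))  # (2, 'DRAM')
print(load(1, l1, l2, dram))  # (2, 'L1')
load(2, l1, l2, dram)
load(3, l1, l2, dram)         # L1 is full, so address 1 is evicted from L1
print(load(1, l1, l2, dram))  # (2, 'L2')
```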


Disk Caching

Disk caching works on the same principle as memory caching, but instead of using high-speed SRAM, a disk cache uses conventional main memory. The most recently accessed data from the disk (as well as adjacent sectors) is stored in a memory buffer. When a program needs to access data from the disk, it first checks the disk cache to see whether the data is there. Disk caching can dramatically improve application performance, because accessing a byte of data in RAM can be thousands of times faster than accessing a byte on a hard disk.
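The disk-cache behaviour above (keep recently read sectors in RAM, also pull in adjacent sectors, check the buffer first) can be sketched as follows. This assumes a hypothetical `fake_disk_read` function in place of a real device read, a 512-byte sector size, and a read-ahead of two sectors; the buffer is unbounded here, whereas a real operating system limits and evicts it.

```python
SECTOR_SIZE = 512  # bytes per sector (a common size; assumed here)


class DiskCache:
    """Keeps recently read sectors, plus read-ahead neighbours, in RAM."""

    def __init__(self, read_sector_from_disk, read_ahead=2):
        self.read_sector_from_disk = read_sector_from_disk  # slow path
        self.read_ahead = read_ahead                        # adjacent sectors to prefetch
        self.buffer = {}                                    # sector number -> bytes
        self.disk_reads = 0

    def read(self, sector):
        # First check the in-memory buffer.
        if sector in self.buffer:
            return self.buffer[sector]
        # Miss: read the requested sector and the next few adjacent ones.
        for s in range(sector, sector + 1 + self.read_ahead):
            if s not in self.buffer:
                self.buffer[s] = self.read_sector_from_disk(s)
                self.disk_reads += 1
        return self.buffer[sector]


def fake_disk_read(sector):
    # Hypothetical stand-in for a slow device read.
    return bytes([sector % 256]) * SECTOR_SIZE


cache = DiskCache(fake_disk_read, read_ahead=2)
cache.read(10)  # miss: reads sectors 10, 11 and 12 from "disk"
cache.read(11)  # hit: sector 11 was prefetched
cache.read(12)  # hit
print(cache.disk_reads)  # prints: 3
```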

Smart Caching

When data is found in the cache, it is called a cache hit, and the effectiveness of a cache is judged by its hit rate. Many cache systems use a technique known as smart caching, in which the system can recognize certain types of frequently used data. The strategies for deciding which information should be kept in the cache are some of the more interesting problems in computer science.
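The post does not say which algorithm a smart cache uses, so the sketch below swaps in a simpler, widely used replacement policy instead: least recently used (LRU), which keeps a fixed number of entries and evicts whichever was touched longest ago. It also counts hits so the hit rate can be computed. The capacity, the access trace, and using `str.lower` as the "slow" loader are all hypothetical choices for illustration.

```python
from collections import OrderedDict


class LRUCache:
    """Fixed-size cache that evicts the least recently used entry."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.entries = OrderedDict()  # ordered from least to most recently used
        self.hits = 0
        self.lookups = 0

    def access(self, key, load):
        self.lookups += 1
        if key in self.entries:
            self.hits += 1
            self.entries.move_to_end(key)     # mark as most recently used
            return self.entries[key]
        value = load(key)                     # miss: fetch from slower storage
        self.entries[key] = value
        if len(self.entries) > self.capacity:
            self.entries.popitem(last=False)  # evict the least recently used entry
        return value

    def hit_rate(self):
        return self.hits / self.lookups if self.lookups else 0.0


cache = LRUCache(capacity=3)
trace = ["A", "B", "A", "C", "A", "D", "B", "A"]
for key in trace:
    cache.access(key, load=str.lower)  # hypothetical "slow" loader
print(f"{cache.hits}/{cache.lookups} hits")  # prints: 3/8 hits
```

LRU works well when recent use predicts future use, which is the same repeated-access behaviour that makes memory caching effective in the first place.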
